Demartek Commentary — Horses, Buggies and SSDs

3 June 2013

By Dennis Martin, Demartek President

As the calendar turned to the year 1900, horses were a part of everyday life and a familiar sight in both urban and rural settings. The wagon, carriage and buggy business was doing well. Horse-drawn vehicles were common and were the predominant form of personal transportation for people and for small amounts of cargo. Although “horseless carriages,” also known as automobiles or motorcars, had been available for a few years, they were expensive and purchased primarily by the wealthy. The early automobiles had reliability problems and often broke down, but a young industry had been launched, and the early adopters were excited about the new technology.

By 1909, the automobile industry was vibrant and growing. Scientific American, then a weekly publication, devoted its entire January 16, 1909 issue to the automobile. That issue included articles titled “The Commercial Truck vs. the Horse,” “How to Convert a Horse-drawn Buggy into a Motor Buggy for Less Than $300,” and “A Handy Testing Chart: What to Do When the Engine Stops.” Manufacturers touted the hill-climbing abilities of their cars and began to reduce the weight of these vehicles.

In 1915, the horse-drawn carriage trade held what turned out to be its last large convention and trade show, in Cleveland, Ohio. Some organizations increased their advertising to promote the benefits of horses for transportation, noting that horses were economical to use. But others in the carriage trade, seeing the transformation under way in their industry, were embracing the automobile and had added automobile components to their lines of business. For a time, these companies supplied parts to both the horse-drawn carriage and the automobile industries.

By 1930, sales of horse-drawn carriages had plummeted by more than 90% from the year 1900. By this time, the infrastructure to support automobiles, such as paved roads and fuel stations, had emerged. Most of the horse-drawn carriage makers that did not transition to the automobile did not survive.

Mass production and the assembly line were revolutionizing this new automobile industry, bringing the price of its products down to levels affordable for a large number of consumers. When Henry Ford introduced the Model T in 1908, it sold for less than half the price of many other cars available at the time. By improving mass production and assembly line techniques, Henry Ford was able to increase the production volume of the Model T, reducing its selling price by 40% within six years and by more than 60% by the 1920s. The higher volumes and lower prices, along with improving reliability, increased customer acceptance, allowing this cycle of higher volume and lower price to feed on itself.

Today, horse-drawn carriages and sleighs are still available, but they are used mostly as novelties for children or for romantic rides in the park that hearken back to yesteryear. Well-maintained automobiles provide several years of reliable service, achieve high enough speeds for typical travel needs, and are loaded with safety, comfort and convenience features.

I believe that the computer storage industry is in a similar transition period to the one experienced in the transportation industry between 1900 and 1930, particularly as it relates to hard disk drives (HDDs) and solid state storage devices. HDDs have existed for more than 50 years, and certainly hold the vast majority of today’s online data. But something different is advancing, fundamentally changing the way that we store data.

Solid state storage, in all its various form factors, is emerging as a good candidate to replace hard disk drives at some point in the future, perhaps not for all use cases, but for many of them. In this article, the acronym SSD (solid state drive) represents any solid state storage product, not a single form factor. The Demartek SSD Deployment Guide provides a more thorough explanation of solid state storage form factors, data placement strategies such as caching and tiering, and other technical features of solid state storage.

The consumer electronics industry has fully embraced solid state storage, to such an extent that it is difficult to imagine purchasing a new mobile device such as a smartphone, a tablet computer or even an ultrathin laptop computer with a mechanical hard disk drive. The high volumes and generally decreasing prices for solid state storage make it practical and an obvious choice in the consumer marketplace. Enterprise datacenters are beginning to embrace solid state storage, but enterprise datacenter applications are a different use case than consumer applications. Technical and pricing challenges await resolution before widespread adoption in the enterprise becomes commonplace.

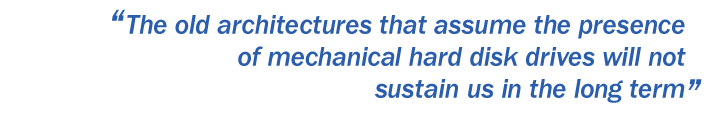

In my opinion, enterprise storage systems of the future need to be designed from the ground up with only non-volatile memory technologies in mind. The old architectures that assume the presence of mechanical hard disk drives will not sustain us in the long term. This design change also affects software stacks. This is why all of the large companies that sell enterprise storage systems have, within the last few years, either acquired smaller companies that design storage systems differently, including many “flash-only” storage systems, or announced completely new storage system designs. This is also why some of the start-up companies are gaining traction in the market.

NAND flash is the first of the non-volatile memory (NVM) technologies to be practical for storing large quantities of data at electronic speeds. The current 20 nm and 19 nm process technologies have plenty of room for growth. Triple level cell (TLC) technology, which is already being deployed in consumer applications, provides additional possibilities for capacity growth at relatively low cost. There are plenty of opportunities to apply error-correcting code (ECC) and other techniques to NAND flash to compensate for the lower write endurance of smaller cell geometries. For example, researchers published an article last year in IEEE Spectrum titled “Flash Memory Survives 100 Million Cycles,” describing how they found a way to apply heat to NAND flash cells to significantly extend their life, borrowing some techniques used in the production of phase change memory.

After NAND flash, there are other NVM technologies, such as phase change memory (PCM), memristor and spin-transfer torque memory, that show promise of becoming commercially viable in the next few years. But the keys to commercial viability lie in scalable fabrication plants (“fabs”) and the investment cycles that determine how much production capacity exists for each type of component.

According to Jim Handy of Objective Analysis, the semiconductor industry has run in predictable four-year cycles. These cycles have the greatest impact on DRAM and NAND flash production, which can use separate fabs, and may extend to other NVM technologies as they become commercially viable. Jim says:

- “During profitable times all semiconductor manufacturers invest in additional capacity. Two years later there’s a glut and prices collapse causing losses.”

- “During a glut nobody invests. Two years later there’s a shortage that drives up profits.”

Economics drive the decision of when to build up new production capabilities, and what type of semiconductor product to build. The next investment cycle is expected to begin late this year or early next year, so the semiconductor companies are currently evaluating technologies and plans. These investment decisions must be made with a great deal of analysis, looking as far ahead into the future as possible, as fabs generally require an investment of at least $1 billion, with individual pieces of equipment costing hundreds of thousands, millions or tens of millions of dollars.

The big makers of NAND flash will not transition to production of a newer NVM technology until two criteria are met:

- The new technology provides a significant improvement over NAND flash in terms of performance, capacity, endurance, cost or some other characteristic.

- They are confident that a large enough sales volume will be generated that justifies the major investment required.

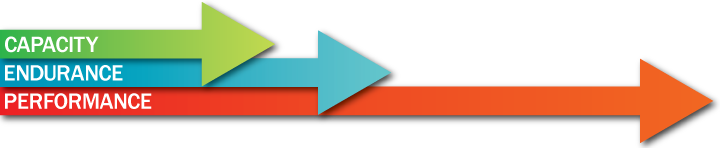

There are three basic dimensions of SSD technology: performance, endurance and capacity. When we measure the performance of SSDs or HDDs in the Demartek Lab, we examine I/O operations per second (IOPS), throughput in megabytes per second (MBps) and round-trip transaction time, known as latency, measured in milliseconds or microseconds. Today’s SSDs have fantastic performance relative to HDDs, delivering at least two orders of magnitude more IOPS, generally higher throughput and significantly lower latency. For certain classes of database and web applications, low latency with predictable variance matters even more than higher IOPS or throughput, and SSD technology meets this requirement.
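The three performance metrics are linked by two standard relationships: throughput equals IOPS multiplied by the transfer (block) size, and, by Little’s law, IOPS equals the number of outstanding I/Os (queue depth) divided by average latency. The Python sketch below applies both; the function names, the 4 KiB block size and the sample latencies are hypothetical illustrations, not Demartek lab measurements.

```python
# Sketch of the standard links between IOPS, throughput and latency.
# Function names and sample numbers are hypothetical illustrations.

def throughput_mbps(iops: float, block_size_kib: float) -> float:
    """Throughput in MB/s (10**6 bytes per second): IOPS x bytes per I/O."""
    bytes_per_io = block_size_kib * 1024
    return iops * bytes_per_io / 1_000_000


def iops_from_latency(queue_depth: int, latency_ms: float) -> float:
    """Little's law: outstanding I/Os = IOPS x latency, so IOPS = QD / latency."""
    if latency_ms <= 0:
        raise ValueError("latency must be positive")
    return queue_depth / (latency_ms / 1000.0)


if __name__ == "__main__":
    # Hypothetical average access latencies at a queue depth of 1.
    for device, latency_ms in [("HDD (hypothetical)", 5.0), ("SSD (hypothetical)", 0.05)]:
        iops = iops_from_latency(queue_depth=1, latency_ms=latency_ms)
        print(f"{device}: {iops:,.0f} IOPS, {throughput_mbps(iops, 4):.2f} MB/s at 4 KiB")
```

With these assumed numbers, a 5 ms access yields 200 IOPS and a 0.05 ms access yields 20,000 IOPS, a difference of two orders of magnitude; at 4 KiB per I/O those rates correspond to roughly 0.82 MB/s and 81.92 MB/s. The relationship also shows why lower latency, not just a larger queue, is what lifts IOPS at low queue depths.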

SSDs appear to deliver enough of a performance improvement over HDDs that suppliers are now focusing their efforts on improving the other two dimensions, endurance and capacity. Engineers continue to make progress in both areas, and current estimates for NAND flash technology are that advances can be made in both areas for the next 5-10 years.

We already see TLC used in larger capacity consumer devices today. It is quite possible that over time, TLC will be enhanced for both endurance and capacity enough to be suitable for enterprise use. Some of the newer NVM technologies may provide more than three bits per cell, further increasing capacity. We’re not there yet, but things are pointing in that direction.
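The capacity side of the bits-per-cell argument follows from a standard relationship: a cell that stores n bits must reliably distinguish 2^n charge (threshold-voltage) levels. In the block below, L(n) is the number of levels and C(n) is the capacity of a fixed number of cells; capacity grows linearly with n, while the number of levels that must be told apart grows exponentially.

```latex
L(n) = 2^{n}, \qquad \frac{C(n)}{C(1)} = n
% n = 1 (SLC): L = 2;  n = 2 (MLC): L = 4;  n = 3 (TLC): L = 8;  n = 4: L = 16
```

For TLC (n = 3) that means 8 levels and three times the per-cell capacity of single level cell (SLC) flash; a 4-bit cell would need 16 levels to gain one-third more capacity than TLC.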

It is my belief that in the not too distant future, enterprise customers will choose between all-flash (or other NVM technology) storage systems that are optimized for endurance (many writes) or all-flash systems that are optimized for capacity. We will see storage systems that have different tiers of flash where the tiers are not separated by performance, but by endurance and capacity, and this trend is already beginning. This choice is not only being driven by the capabilities of the technology, but also by the applications running in datacenters. It can be assumed that an all-flash storage system delivers fantastic performance, relative to today’s HDD-based systems, so application owners will need to consider endurance and capacity of storage devices and systems. Many of today’s datacenter applications require large amounts of capacity, while others perform a high number of writes.
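One common way to express endurance is drive writes per day (DWPD): how many times a device’s full capacity can be written each day over its rated life. The Python sketch below, which is an illustration rather than a method described in this commentary, estimates the DWPD an application imposes and chooses between an endurance-optimized and a capacity-optimized flash tier. The tier names and their DWPD ratings are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class FlashTier:
    name: str          # hypothetical tier label
    rated_dwpd: float  # full-capacity writes per day the tier is rated for


# Hypothetical tiers: real endurance ratings vary widely by product and vendor.
ENDURANCE_TIER = FlashTier("endurance-optimized", rated_dwpd=10.0)
CAPACITY_TIER = FlashTier("capacity-optimized", rated_dwpd=1.0)


def required_dwpd(daily_writes_tb: float, usable_capacity_tb: float) -> float:
    """Drive writes per day that the workload imposes on the provisioned capacity."""
    if usable_capacity_tb <= 0:
        raise ValueError("capacity must be positive")
    return daily_writes_tb / usable_capacity_tb


def choose_tier(daily_writes_tb: float, usable_capacity_tb: float) -> FlashTier:
    """Prefer the capacity tier unless the write load exceeds its rating."""
    need = required_dwpd(daily_writes_tb, usable_capacity_tb)
    if need <= CAPACITY_TIER.rated_dwpd:
        return CAPACITY_TIER
    if need <= ENDURANCE_TIER.rated_dwpd:
        return ENDURANCE_TIER
    raise ValueError(f"workload needs {need:.1f} DWPD; no tier is rated for it")


if __name__ == "__main__":
    print(choose_tier(daily_writes_tb=2, usable_capacity_tb=100).name)  # archive-like
    print(choose_tier(daily_writes_tb=30, usable_capacity_tb=5).name)   # write-heavy
```

For example, a hypothetical archive writing 2 TB per day to 100 TB of flash needs only 0.02 DWPD and lands on the capacity tier, while a hypothetical transaction log writing 30 TB per day to 5 TB needs 6 DWPD and lands on the endurance tier. The sketch ignores write amplification inside the SSD, which raises the physical write load above what the application issues.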

Today’s enterprise-class SSDs have overtaken enterprise HDDs in terms of available capacity per device. Enterprise SSDs also consume less power than enterprise HDDs. Over the next few years as NAND flash technology is improved, especially in terms of available capacity, and other NVM technologies become commercially viable, it is quite possible that this type of technology could be used for online archiving, replacing other mechanical storage types such as HDDs and magnetic tape.

Although SSDs were an option in at least one enterprise storage array as early as 2008, I’m using 2010 as the beginning of this transition from HDDs to SSD technology. SSDs existed before then, but that year saw the introduction of interesting consumer devices, such as the Apple iPad, that began to drive up demand for, and volume production of, SSD technology. Demartek also made a small but strategic decision involving SSDs that year that is still paying dividends. I expect this industry transition to take 20-25 years, similar to the transition from horse-drawn carriages to automobiles.

During this transition period, we will see a number of hybrid solutions that combine different technologies, such as NAND flash and HDDs or NAND flash and DRAM, along with other combinations in a variety of forms. We will also see several types and speeds of interfaces used for these SSDs, both inside the server and external to the server. Based on our lab test results, I have been saying for some time that SSDs and high-speed interfaces were made for each other.

I believe that this transition will take this long for three reasons. First, the industry needs enough time to develop and refine non-volatile memory hardware and software technologies to improve the endurance, capacity and reliability levels required for both consumer and enterprise applications. Second, there is a huge amount of existing investment in hard disk drive technology and infrastructure that won’t be discarded anytime soon. Third, it takes some time for a large number of humans to fully embrace a new technology. During this transition time, there will be a great deal of challenge and opportunity, which I welcome.

We are pleased to announce that Principled Technologies has acquired Demartek.
