Demartek Publishes Article on RDMA Storage Systems and Latency

April 2016

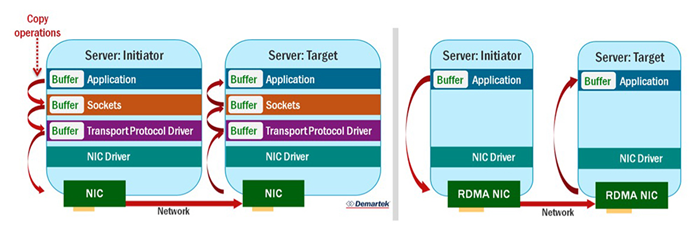

Remote Direct Memory Access (RDMA) technology enables more direct movement of data in and out of a server. The technology can be implemented for networking and storage applications. RDMA storage systems bypass parts of the normal system software network stack, such as cache and operating system layers, along with the multiple buffer copy operations those layers normally perform. This reduces overall CPU utilization and lowers the latency of the host software stack, since fewer instructions are needed to complete a data transfer. Some RDMA adapters also have offload functions built in.

Storage networking protocols that can run over RDMA include the iSCSI block protocol and the NFS and SMB (formerly known as CIFS) file protocols. RDMA can improve storage latency and reduce the CPU utilization of the host server performing I/O requests. As a result, a server can sustain a higher rate of I/O requests, or a smaller server can handle the same rate. This can make RDMA storage networking valuable in environments that can tolerate very little latency, such as supercomputing environments and some database workloads.
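The trade-off between CPU cost per I/O and sustainable I/O rate can be illustrated with some back-of-the-envelope arithmetic. The Python sketch below is only an illustration: the function names, the clock speed, and the cycles-per-I/O figures are hypothetical assumptions chosen for round numbers, not measurements from the article, and the model assumes the CPU is the only bottleneck.

```python
def max_iops(cores: int, clock_hz: float, cycles_per_io: float) -> float:
    """Upper bound on I/O requests per second if CPU were the only limit."""
    return cores * clock_hz / cycles_per_io


def cores_needed(target_iops: float, clock_hz: float, cycles_per_io: float) -> float:
    """CPU cores required to sustain target_iops under the same assumption."""
    return target_iops * cycles_per_io / clock_hz


# Hypothetical figures for illustration only (not measured values).
CLOCK_HZ = 2.5e9               # assumed 2.5 GHz cores
CYCLES_CONVENTIONAL = 50_000   # assumed CPU cycles per I/O, conventional stack
CYCLES_RDMA = 20_000           # assumed CPU cycles per I/O, RDMA path
CORES = 8

conventional_iops = max_iops(CORES, CLOCK_HZ, CYCLES_CONVENTIONAL)
rdma_iops = max_iops(CORES, CLOCK_HZ, CYCLES_RDMA)

# Cores an RDMA-based host would need to match the conventional I/O rate.
rdma_cores_for_same_rate = cores_needed(conventional_iops, CLOCK_HZ, CYCLES_RDMA)

print(f"Conventional stack: {conventional_iops:,.0f} IOPS on {CORES} cores")
print(f"RDMA path:          {rdma_iops:,.0f} IOPS on {CORES} cores")
print(f"Cores needed with RDMA for the conventional rate: {rdma_cores_for_same_rate:.1f}")
```

Under these assumed numbers, cutting the cycles spent per I/O either raises the I/O rate the same server can sustain or lets a server with fewer cores handle the original rate. Real gains depend on the workload, adapter, and protocol.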

InfiniBand uses RDMA natively. RDMA can be added to Ethernet by using special adapters. With the addition of faster gigabit Ethernet speeds in 2016, RDMA over Ethernet may be useful to further reduce overhead.

The complete article is available on the SearchSolidStateStorage web site.

Update: View the Demartek RoCE Deployment Guide.