Demartek NVMe over Fabrics Rules of Thumb

NVMe over Fabrics and the Oversubscription Problem

August 2017

By Dennis Martin, Demartek President

During two of my presentations at the Flash Memory Summit 2017 earlier this month, I discussed the Demartek NVMe over Fabrics (NVMe-oF) rules of thumb. Although I summarized these on one slide, here is a bit more explanation.

NVMe over Fabrics provides a way to transmit the NVMe block storage protocol over a range of storage networking fabrics. This brings the benefits of storage area networking (SAN) technology to NVMe, including scaling out to large numbers of NVMe devices and communicating with these devices over datacenter distances (up to dozens or possibly hundreds of meters). The supported fabrics today include RDMA (InfiniBand, RoCE, iWARP) and Fibre Channel, and the NVMe-oF specification is designed to support other fabrics that may be developed in the future.

One of those future fabrics is NVMe-TCP, which will enable the use of NVMe-oF over existing datacenter IP networks. There were some technology demonstrations of NVMe-TCP at this year’s Flash Memory Summit, and NVMe-TCP will be included in the next revision of the NVMe-oF specification, version 1.1, which is expected in 2019.

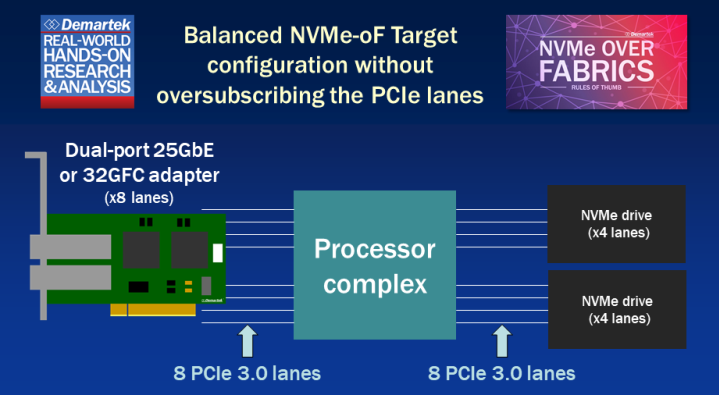

In our NVMe-oF testing of both RDMA (RoCE) and Fibre Channel fabrics, we’ve noticed that bottlenecks can appear in the storage target if the available bandwidth of the fabric adapters is not balanced with the performance of the NVMe drives inside the storage target. Let’s consider a simple example to explain what we mean by balance in this context.

Before we get into the details, we need to remember two important factors that apply to both the NVMe drives and the fabric (network) adapters: the number of PCIe lanes required for the device and the maximum throughput rate of the device.

Suppose a storage target has two typical enterprise NVMe drives. Each of these NVMe drives is PCIe 3.0 x4, meaning that there is approximately 4 gigabytes/second (4 GBps) of available bandwidth provided to each drive by the PCIe bus. Combined, these two NVMe drives require eight PCIe 3.0 lanes. Let’s further suppose that each of these particular NVMe drives has a maximum sequential read speed of 3100 MB/second (~3.03 GBps).
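As a rough sketch of where the "approximately 4 GBps" figure comes from, the snippet below estimates PCIe 3.0 link bandwidth from the per-lane signaling rate (8 GT/s) and the 128b/130b encoding used by PCIe 3.0. The function name is illustrative, and the result ignores packet and protocol overhead, so real devices will see somewhat less.

```python
# Sketch: approximate one-direction bandwidth of a PCIe 3.0 link.
# Assumes 8 GT/s per lane and 128b/130b encoding; packet/protocol
# overhead is ignored, so this is an upper bound, not a measurement.
PCIE3_GT_PER_SEC = 8.0          # giga-transfers per second, per lane
PCIE3_ENCODING = 128 / 130      # payload bits per bit on the link


def pcie3_bandwidth_gbytes(lanes: int) -> float:
    """Return the approximate bandwidth in gigabytes/second for a link width."""
    return lanes * PCIE3_GT_PER_SEC * PCIE3_ENCODING / 8


for lanes in (4, 8, 16):
    print(f"PCIe 3.0 x{lanes}: ~{pcie3_bandwidth_gbytes(lanes):.2f} GBps")
```

For an x4 link this comes out slightly under 4 GBps, which is why the 3100 MB/second drive in this example fits within its PCIe allotment.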

Now let’s examine the networking part of this NVMe-oF target that has two NVMe drives. Let’s consider two dual-port adapters: one for an Ethernet RDMA fabric (RoCE or iWARP) and one for a Fibre Channel fabric. A dual-port 25 Gbps Ethernet adapter requires a PCIe 3.0 x8 slot. A dual-port 32 Gbps Fibre Channel adapter also requires a PCIe 3.0 x8 slot. So, two ports of either type of adapter require eight PCIe 3.0 lanes.

The Ethernet adapter runs at 25 Gbps and the Fibre Channel adapter runs at 32 Gbps. Let’s factor in the typical encoding scheme used for these adapters, which is 64b/66b. This means that for every 64 bits of data, 66 bits are placed on the wire; the two extra bits carry command and control for the connection. To convert the raw line rate in gigabits per second to a maximum possible throughput rate in gigabytes per second, we divide by eight (the number of bits in one byte), then multiply by the encoding efficiency. For an encoding scheme of 64b/66b, the multiplication factor is 64/66, or about 0.9697 (approximately 3% overhead).

Performing the calculations described above, we find that a 25 Gbps adapter can support a maximum effective data rate of 3.03 GBps or 3103 MBps (3.03 x 1024). The same calculation for a 32 Gbps connection yields a maximum throughput rate of 3.88 GBps or about 3972 MBps.
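The conversion described above can be sketched in a few lines. This follows the article's simplification: it treats the nominal port speed as the raw line rate, applies the 64b/66b factor, and uses 1 GBps = 1024 MBps. The function name and defaults are illustrative only.

```python
# Sketch: line rate (Gbps) -> maximum effective throughput.
# Assumptions: nominal port speed is used as the raw line rate,
# and 1 GBps = 1024 MBps, matching the convention in the text.
def effective_throughput(line_rate_gbps: float,
                         data_bits: int = 64,
                         total_bits: int = 66) -> tuple[float, float]:
    """Return (GBps, MBps) after dividing by 8 and applying encoding efficiency."""
    gbytes = line_rate_gbps / 8 * data_bits / total_bits
    return gbytes, gbytes * 1024


for name, rate in (("25GbE", 25), ("32GFC", 32)):
    gbytes, mbytes = effective_throughput(rate)
    print(f"{name}: {gbytes:.2f} GBps, {mbytes:.0f} MBps")
```

Passing `data_bits=128, total_bits=130` to the same function gives the result for a different encoding scheme.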

If an NVMe-oF storage target is designed to handle maximum possible throughput without oversubscription in any location in the architecture, then the following observations apply:

- Two NVMe PCIe 3.0 x4 devices require eight PCIe 3.0 lanes.

- A dual-port 25GbE or 32GFC adapter requires eight PCIe 3.0 lanes.

- A total of 16 PCIe 3.0 lanes in the storage target are required to support two NVMe drives and one dual-port adapter (of the types described above).

- There is approximately a one-to-one relationship between the number of NVMe drives (PCIe 3.0 x4) and the number of fabric ports (25GbE or 32GFC) required for a balanced configuration.

There is a minor exception to the fourth bullet point above. For NVMe drives that exceed ~ 3100 MBps throughput rates, the 25GbE adapter port will be a bottleneck, but the 32GFC adapter will not be a bottleneck until the NVMe drive throughput rate exceeds ~ 3970 MBps.
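To make the exception concrete, here is a minimal sketch that compares a drive's sequential throughput with the per-port limit and reports which side caps the pair. The 3500 and 4000 MBps drive speeds are hypothetical values chosen for illustration; the port limit uses the same 64b/66b arithmetic and 1024 MBps-per-GBps convention as above.

```python
# Sketch: which component limits a one-drive, one-port pairing?
# Drive speeds other than 3100 MBps are hypothetical examples.
def port_limit_mbps(line_rate_gbps: float) -> float:
    """Maximum port throughput in MBps with 64b/66b encoding."""
    return line_rate_gbps / 8 * 64 / 66 * 1024


def limiting_component(drive_mbps: float, line_rate_gbps: float) -> str:
    """Return 'port' if the fabric port is the bottleneck, else 'drive'."""
    return "port" if drive_mbps > port_limit_mbps(line_rate_gbps) else "drive"


for drive_mbps in (3100, 3500, 4000):
    for name, rate in (("25GbE", 25), ("32GFC", 32)):
        limit = limiting_component(drive_mbps, rate)
        print(f"{drive_mbps} MBps drive on {name}: limited by {limit}")
```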

If the encoding scheme of the adapter were the same as that of the PCIe 3.0 bus, which is 128b/130b, then the multiplication factor would be 0.9846 (~1.5% overhead), which would add about 50 MBps to the maximum throughput rate of the 25GbE adapter and about 60 MBps to the 32GFC adapter.

Let’s extend our maximum possible throughput scenario to larger configurations. To support ten NVMe drives and ten network ports (either 25GbE or 32GFC) without oversubscription in a storage target, 80 PCIe 3.0 lanes would be required (40 for the NVMe drives and 40 for the adapters). To support 24 NVMe drives and 24 network ports without oversubscription would require 192 PCIe 3.0 lanes.
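The lane counts above follow from simple multiplication, sketched below. The sketch assumes x4 drives and dual-port x8 adapters as described in this article; the function and parameter names are illustrative.

```python
import math


# Sketch: PCIe 3.0 lanes needed for a non-oversubscribed target.
# Assumes x4 NVMe drives and dual-port adapters in x8 slots.
def lanes_required(drives: int, ports: int,
                   lanes_per_drive: int = 4,
                   lanes_per_dual_port_adapter: int = 8) -> int:
    """Return total lanes for the drives plus enough dual-port adapters."""
    adapters = math.ceil(ports / 2)
    return drives * lanes_per_drive + adapters * lanes_per_dual_port_adapter


for count in (2, 10, 24):
    print(f"{count} drives + {count} ports: {lanes_required(count, count)} lanes")
```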

The number of PCIe lanes supported by server processors becomes an important factor when building an NVMe-oF storage target. The processors used in an NVMe-oF target must have enough processing power to support all the activity and data movement of the NVMe drives and network adapters in addition to providing storage services and management functions.

A reasonable objection to these observations is that it is unlikely that real-world application workloads using an NVMe-oF storage target would run 100% large-block, sequential read operations, but would more likely be a mix of read and write operations and would use various block sizes. In these real-world cases, it is not likely that the NVMe drives or fabric adapters would be stressed to maximum throughput levels on a sustained basis. In some cases, there may be more pressure on IOPS, especially for smaller block size I/O.

Given the data that we have provided, vendors of NVMe-oF storage targets may advertise their oversubscription factor, or at least disclose it if asked. We may see oversubscription factors of 2:1 or 3:1 in these targets, and we may see PCIe switches included in the designs of these systems.
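One way to estimate such a factor is sketched below. It assumes the oversubscription factor is the ratio of aggregate drive throughput to aggregate fabric port throughput, which is our working definition here and not necessarily how a given vendor computes it. The defaults reuse the 3100 MBps drive and the roughly 3103 MBps 25GbE port from the example above; the drive and port counts are hypothetical.

```python
# Sketch: oversubscription factor of a storage target.
# Assumed definition: aggregate drive throughput divided by
# aggregate fabric port throughput. Counts below are hypothetical.
def oversubscription_factor(drives: int, ports: int,
                            drive_mbps: float = 3100.0,
                            port_mbps: float = 3103.0) -> float:
    """Return the drive-to-fabric throughput ratio."""
    return (drives * drive_mbps) / (ports * port_mbps)


for drives, ports in ((24, 24), (24, 12), (24, 8)):
    factor = oversubscription_factor(drives, ports)
    print(f"{drives} drives, {ports} ports: about {factor:.1f}:1")
```

With 24 drives, halving the port count to 12 gives roughly 2:1, and dropping to 8 ports gives roughly 3:1.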

All of this will change when PCIe 4.0 becomes available, and again later when PCIe 5.0 becomes a reality. These rules of thumb also change as NVMe drives become available that use more lanes, such as PCIe 3.0 x8 or PCIe 3.0 x16.

Our Storage Interface Comparison page provides additional detail on encoding schemes, PCIe 4.0, PCIe 5.0 and various storage interfaces.

We are pleased to announce that Principled Technologies has acquired Demartek.
